1. Introduction

Kubernetes has been the most widely used tool for container orchestration for quite some time. Checkmk supports you in monitoring your Kubernetes environments.

As of version 2.1.0, you can use Checkmk to monitor the following Kubernetes objects:

Clusters

Nodes

Deployments

Pods

DaemonSets

StatefulSets

For a complete listing of all of the check plug-ins available for monitoring Kubernetes, please see our Catalog of Check Plug-ins.

1.1. Getting started with Kubernetes monitoring

For an introduction to the new monitoring of Kubernetes, we recommend our two videos Kubernetes Monitoring with Checkmk and Detecting issues and configuring alerts for Kubernetes clusters.

1.2. Structure of the monitoring environment

Since Kubernetes clusters can quickly undergo major changes in terms of the number and location of individual components, we recommend creating a separate site for monitoring your Kubernetes environment. You can then connect this site to your central site as usual via distributed monitoring.

1.3. The process of monitoring Kubernetes in Checkmk

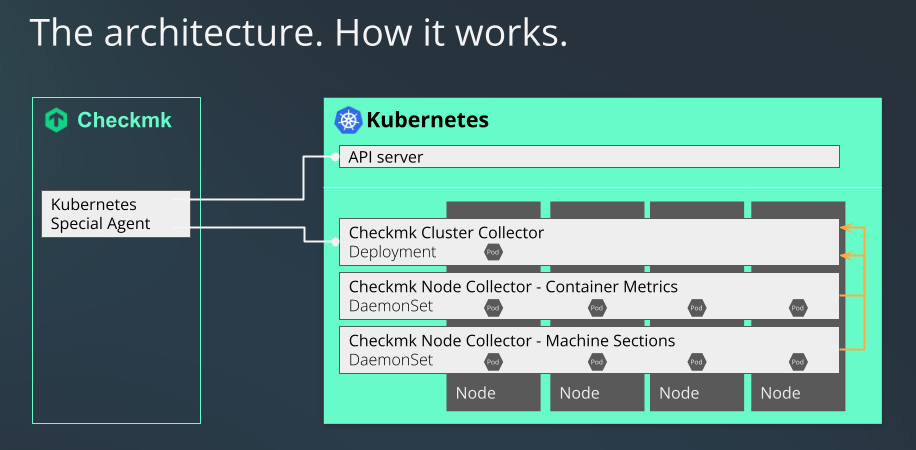

Checkmk monitors your Kubernetes clusters in two ways:

The Kubernetes special agent simply retrieves basic information via your cluster’s API server. This can already be used to retrieve the states of nodes and containers. Most of the metadata of your pods and deployments is also obtained in this way.

For comprehensive monitoring, however, this is not yet enough. Questions such as how much CPU load a particular deployment generates, or how much memory a DaemonSet is currently using, cannot be answered in this way.

This is where our Checkmk Node Collector and our Checkmk Cluster Collector come into play.

These are an indispensable part of Kubernetes monitoring within Checkmk.

A considerable part of this article is therefore about installing and setting these up.

In addition, the use of the Kubernetes dashboards in the Checkmk Enterprise Editions only makes sense if the Node and Cluster Collectors can provide data on the loads for this.

1.4. Differences compared to other monitoring in Checkmk

When monitoring pods and replicas in your Kubernetes clusters, status changes or delays sometimes occur much more frequently. To account for this, the checks for certain states of these objects only change their status in Checkmk after 10 minutes.

1.5. Differences compared to existing Kubernetes monitoring

Kubernetes monitoring in Checkmk has been rewritten from scratch. The scope of the data that can be monitored has grown considerably. Since the technical basis for Kubernetes monitoring in Checkmk 2.1.0 is fundamentally different, it is not possible to transfer or even rewrite previous monitoring data from your Kubernetes objects.

2. Creating prerequisites in the cluster

To be able to monitor your Kubernetes cluster in Checkmk, first create the prerequisites in your cluster. First and foremost, tell the cluster which pods/containers to deploy and how to configure them.

2.1. Setting up the Helm repository

The installation of Kubernetes monitoring is done with the help of the helm tool.

Helm is also suitable for less experienced users and standardizes the management of configurations.

Helm is a kind of package manager for Kubernetes.

If you are not yet using Helm, you can usually get it from your Linux distribution’s package manager or from the Helm project website.

You can use Helm to include repositories as sources and easily add the Helm charts that these contain to your cluster in the same way as packages.

First of all, identify the repository.

In the following example, we use the name checkmk-chart to make it easier to access the repository later.

You can, of course, also use any other name of your choice:

user@host:~$ helm repo add checkmk-chart https://checkmk.github.io/checkmk_kube_agent

We update our Helm charts whenever new developments in Kubernetes require it.

It is therefore worth checking from time to time whether new versions are available in the repository.

If you have named your local copy of our repository checkmk-chart, as in the previous command, you can use the following command to display all versions of the charts available in the repository:

user@host:~$ helm search repo checkmk-chart --versions
NAME                    CHART VERSION   APP VERSION   DESCRIPTION
checkmk-chart/checkmk   1.2.0           1.2.0         Helm chart for Checkmk - Your complete IT monit...
checkmk-chart/checkmk   1.1.0           1.1.0         Helm chart for Checkmk - Your complete IT monit...
checkmk-chart/checkmk   1.0.1           1.0.1         Helm chart for Checkmk - Your complete IT monit...
checkmk-chart/checkmk   1.0.0           1.0.0         Helm chart for Checkmk - Your complete IT monit...

If a newer version is available, you can update with helm repo update.
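If you want to check for new chart versions in a script, the listing above can be reduced to the newest version number. The following is only a minimal sketch: the function name latest_chart_version is made up for this example, it assumes the whitespace-separated layout shown above (one header line, chart version in the second column), and it relies on the -V (version sort) option of GNU sort.

```shell
# Print the highest CHART VERSION found in `helm search repo ... --versions`
# output read from stdin. Assumption: one header line, version in column 2.
latest_chart_version() {
  awk 'NR > 1 && NF >= 2 { print $2 }' | sort -V | tail -n 1
}

# Demonstration with a shortened copy of the sample output from this article.
latest_chart_version <<'EOF'
NAME                    CHART VERSION   APP VERSION   DESCRIPTION
checkmk-chart/checkmk   1.1.0           1.1.0         Helm chart for Checkmk
checkmk-chart/checkmk   1.2.0           1.2.0         Helm chart for Checkmk
checkmk-chart/checkmk   1.0.1           1.0.1         Helm chart for Checkmk
EOF
```

Against a real repository, you would pipe the command from above into the function: helm search repo checkmk-chart --versions | latest_chart_version.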

2.2. Customizing the configuration to your environment

Since we cannot know in advance how your Kubernetes cluster is structured, we have chosen the safest variant for how the Cluster Collectors are started: By default, no ports are provided that can be accessed remotely. In order to be able to access the collectors later, you will need to adapt these settings to your particular cluster.

We support two communication paths by default: the query via Ingress and the query via NodePort. The configuration of these will vary depending on which variant you support in your cluster.

In order to be able to determine certain parameters yourself across all configurations, you include a control file, the so-called values.yaml.

There are two ways to create such a values.yaml.

You can either extract the file supplied by us in the Helm charts and edit it, or you can simply create a minimal version yourself.

Whenever you want to deploy changes to this file in your cluster, you can again use the Helm chart installation command that we will cover later in this article.

Creating your own basic values.yaml

You can create a values.yaml in which you only enter the values that you want to modify.

In our Helm chart, for example, the Cluster Collector’s service type is set to ClusterIP by default.

If you now only want to change this service type to NodePort and the port to 30035, it is sufficient to simply create a values.yaml as follows:

user@host:~$ echo 'clusterCollector: {service: {type: NodePort, nodePort: 30035}}' > values.yaml

An activation of Ingress could look like this:

user@host:~$ echo 'clusterCollector: {ingress: { enabled: true }}' > values.yaml

Extracting values.yaml from Helm charts

The complete values.yaml supplied by us can be easily extracted with the following command:

user@host:~$ helm show values checkmk-chart/checkmk > values.yaml

You can now adapt the file created in this way to suit your needs and pass it to helm with the -f values.yaml parameter during the installation, or during a later upgrade.
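Note that the one-line echo commands for a minimal values.yaml shown earlier each overwrite the file, because they redirect with >. If you prefer a more readable file, the same NodePort override can be written in YAML block style. This is a sketch only: the function name write_minimal_values is hypothetical, and the demonstration writes to a temporary directory so that an existing values.yaml is not touched.

```shell
# Write a minimal values.yaml in block style. Only the overridden keys are
# listed; all other settings keep the defaults of the Helm chart.
# $1: target file, $2: NodePort number (30035 is the example value used above)
write_minimal_values() {
  cat > "$1" <<EOF
clusterCollector:
  service:
    type: NodePort
    nodePort: $2
EOF
}

# Demonstration in a temporary directory.
workdir=$(mktemp -d)
write_minimal_values "$workdir/values.yaml" 30035
cat "$workdir/values.yaml"
```

The resulting file is equivalent to the flow-style one-liner and is passed to helm in the same way with -f values.yaml.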

Providing communication via Ingress

If you use Ingress to control access to your services, edit the already prepared parts in values.yaml accordingly.

For a better overview, only the relevant part is shown in the following abbreviated example.

Set the parameter enabled to true.

Adapt the remaining parameters according to your environment:

ingress:
  enabled: true
  className: ""
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  hosts:
    - host: checkmk-cluster-collector.local
      paths:
        - path: /
          pathType: Prefix
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

Providing communication via NodePort

You can also provide access to the services directly via a port.

This is necessary if you do not use Ingress.

In the following example, only the relevant section is shown.

You set the value type to NodePort and remove the comment for the value nodePort:

service:
  # if required specify "NodePort" here to expose the cluster-collector via the "nodePort" specified below
  type: NodePort
  port: 8080
  nodePort: 30035

Configuring the Cluster Collector for HTTPS

If you want to switch the communication with and between the Cluster Collectors to HTTPS, you must also make changes in the values.yaml file.

Below is the section in our supplied values.yaml that you need to edit to enable HTTPS:

tlsCommunication:
  enabled: false
  verifySsl: false
  # clusterCollectorKey: |-
  #   -----BEGIN EC PRIVATE KEY-----
  #   XYZ
  #   -----END EC PRIVATE KEY-----
  # clusterCollectorCert: |-
  #   -----BEGIN CERTIFICATE-----
  #   XYZ
  #   -----END CERTIFICATE-----
  # checkmkCaCert: |-
  #   -----BEGIN CERTIFICATE-----
  #   XYZ
  #   -----END CERTIFICATE-----

In the lines beginning with enabled or verifySsl, you must replace false with true.

Next, remove the hash marks before the three sections clusterCollectorKey, clusterCollectorCert and checkmkCaCert and insert the corresponding data following these.

Your organization should determine whether to use self-signed certificates or to obtain certificates from a Certificate Authority (CA).

Please note that the certificates must fulfill the following requirements:

The CA certificate must contain the host name or the name of the Ingress as FQDN.

For the server certificate, the FQDN must correspond to the following pattern: <service_name>.<namespace>.cluster.local.

In the [ v3_ext ] section of the configuration file for generating your Certificate Signing Request, the subjectAltName must match the following pattern: subjectAltName: DNS:<service_name>.<namespace>.cluster.local, IP:<service ip>
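To avoid typing errors in the subjectAltName, the value can be assembled from its three parts. The following sketch only formats the pattern given above; the function name cluster_collector_san is hypothetical, the service name and namespace follow the examples used in this article, and the IP address 10.96.0.50 is a made-up placeholder.

```shell
# Build the subjectAltName value for the Cluster Collector server certificate.
# $1: service name, $2: namespace, $3: IP address of the service
cluster_collector_san() {
  printf 'DNS:%s.%s.cluster.local, IP:%s\n' "$1" "$2" "$3"
}

# Placeholder IP address; replace it with the address of your own service.
cluster_collector_san myrelease-checkmk-cluster-collector checkmk-monitoring 10.96.0.50
```

If the service already exists, its address can usually be read with kubectl get service myrelease-checkmk-cluster-collector -n checkmk-monitoring -o jsonpath='{.spec.clusterIP}'.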

Using your own service account

By default, our Helm charts create a service account in your cluster.

If you already have a suitable service account, it is sufficient to enter it in values.yaml and suppress the creation of a new account:

serviceAccount:
  create: false
  name: "myserviceaccount"

Configure the Pod Security admission controller

If you use Pod Security Standards in your cluster, you must set up the Checkmk Cluster Collector so that it has unrestricted access in the corresponding namespace. Ideally, create a namespace with the following spec:

apiVersion: v1
kind: Namespace
metadata:
  name: checkmk-monitoring
  labels:
    pod-security.kubernetes.io/enforce: privileged
    pod-security.kubernetes.io/enforce-version: latest

You can create the namespace by running, for example, kubectl apply -f namespace.yaml.

Keep in mind that you then don’t need to use the option --create-namespace when you execute the helm upgrade command later.

If the Cluster Collector is already running or the namespace already exists, you can also set the above labels with the following command:

user@host:~$ kubectl label --overwrite ns checkmk-monitoring pod-security.kubernetes.io/enforce=privileged pod-security.kubernetes.io/enforce-version=latest

Pod Security Policies and Network Policies

The PodSecurityPolicy (PSP for short) and NetworkPolicy policies are included in our Helm chart primarily for compatibility reasons.

Since PSPs were removed entirely from Kubernetes in v1.25, we have disabled them by default as of version 1.3.0 of our Helm chart.

The corresponding section now looks like this:

rbac:
  pspEnabled: false

If you still use PSPs in your cluster, you must set this option to true in values.yaml:

rbac:
  pspEnabled: true

If we later find that this entry is not processed correctly even when disabled, we will remove it entirely.

The same applies to the NetworkPolicy.

If you use this in your cluster, you will need to change the corresponding setting in values.yaml from enabled: false to enabled: true.

In this situation, please refer to the following documentation within values.yaml to configure the NetworkPolicy correctly.

## ref: https://kubernetes.io/docs/concepts/services-networking/network-policies/
networkPolicy:
  # keep in mind: your cluster network plugin has to support NetworkPolicies, otherwise they won't have any effect
  enabled: false

  # specify ipBlock cidrs here to allow ingress to the cluster-collector
  # this is required for the checkmk servers to be able to scrape data from checkmk, so include the respective ip range(s) here
  allowIngressFromCIDRs: []
  # - 127.0.0.1/32 # e.g. Checkmk Server
  # - 127.0.0.1/24 # e.g. Metallb speakers

  # the cluster-collector needs to be able to contact the kube-apiserver
  # you have three options here to choose from, depending on your cluster setup:
  # 1) if your apiserver resides outside the cluster, resp. you have a kubernetes endpoint available (check via "kubectl -n default get ep kubernetes")
  #    we can make use of helm lookup to automatically inject the endpoint (cidr + port) here.
  #    This is the most comfortable one, just note that helm lookup won't work on a "helm template" or "helm diff" command.
  #    (see also: https://helm.sh/docs/chart_template_guide/functions_and_pipelines/#using-the-lookup-function)
  # 2) similar to 1) you can also specify the "ipBlockCidr" directly. Make sure to disable "enableCidrLookup", and also fill the "port".
  # 3) if the apiserver resides inside the cluster, disable "enableCidrLookup", unset "ipBlockCidr", and fill the "labelSelectors" section
  #    with the name of the namespace where the kube-apiserver is available, and the label key and label value that defines your kube-apiserver pod.
  egressKubeApiserver:
    enableCidrLookup: true
    # ipBlockCidr: 172.31.0.3/32
    # port: 6443
    # labelSelectors:
    #   namespace: kube-system
    #   key: app
    #   value: kube-apiserver

2.3. Installing Helm charts

After customizing values.yaml or creating your own, use the following command to install all of the necessary components into your cluster to be able to monitor it in Checkmk:

user@host:~$ helm upgrade --install --create-namespace -n checkmk-monitoring myrelease checkmk-chart/checkmk -f values.yaml

Since this command is not self-explanatory, we provide an explanation of the individual options below:

| Command element | Description |
|---|---|
| helm upgrade --install | This part is the basic command to send the configuration to the Kubernetes cluster. |
| --create-namespace | In Kubernetes, you always specify to which namespace the configuration should be added. You need this option if the namespace does not yet exist. Helm will create it in this case. |
| -n checkmk-monitoring | This option specifies the namespace to which the configuration should be added. |
| myrelease | Here, myrelease is the name of the release. |
| checkmk-chart/checkmk | The first part of this option describes the repository you previously created. The second part, after the slash, is the package that contains the information necessary to create the configuration for your Kubernetes monitoring. |
| -f values.yaml | Finally, enter the configuration file that you created or adapted earlier. It contains all of the customizations to be included in the configuration files created with helm. |

Once you have executed the command, your Kubernetes cluster is prepared for monitoring with Checkmk. The cluster will now take care of itself to ensure that the necessary pods and the containers they contain are running and accessible.
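Before continuing, you may want to confirm that the pods created by the chart have actually started. The sketch below is one possible check and not part of the Helm chart: the function name all_pods_running is made up, and it assumes the usual column layout of kubectl get pods --no-headers, in which STATUS is the third column. The second pod name in the demonstration is invented.

```shell
# Succeed only if every pod line read from stdin reports the STATUS "Running".
# An empty input counts as a failure, since no pods were found at all.
all_pods_running() {
  awk '$3 != "Running" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Demonstration with sample lines (second pod name is hypothetical).
if all_pods_running <<'EOF'
myrelease-checkmk-cluster-collector-58f97df9c9-mdhsw   1/1   Running   0   2m
myrelease-checkmk-node-collector-example-abcde         2/2   Running   0   2m
EOF
then
  echo "all pods running"
else
  echo "some pods are not running yet"
fi
```

With access to the cluster, the input would come from kubectl get pods -n checkmk-monitoring --no-headers | all_pods_running.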

Helm chart output

So the next thing to do is to set it up in Checkmk.

To make this setup as easy as possible, we have equipped the output from our Helm charts with a whole series of commands.

This output also automatically conforms to the values you specified in the values.yaml file.

If you use the NodePort, you will get the commands to display the NodePort’s IP and port, among other things.

If instead you use Ingress, the output will be adapted accordingly.

In the following we show the — slightly abbreviated — output following a successful installation when using the NodePort:

user@host:~$ helm upgrade --install --create-namespace -n checkmk-monitoring myrelease checkmk-chart/checkmk -f values.yaml
Release "myrelease" has been upgraded. Happy Helming!
NAME: myrelease
LAST DEPLOYED: Sat Dec 16 19:00:11 2022
NAMESPACE: checkmk-monitoring
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
You can access the checkmk cluster-collector via:
NodePort:
  export NODE_PORT=$(kubectl get --namespace checkmk-monitoring -o jsonpath="{.spec.ports[0].nodePort}" services myrelease-checkmk-cluster-collector);
  export NODE_IP=$(kubectl get nodes --namespace checkmk-monitoring -o jsonpath="{.items[0].status.addresses[0].address}");
  echo http://$NODE_IP:$NODE_PORT
  # Cluster-internal DNS of cluster-collector: myrelease-checkmk-cluster-collector.checkmk-monitoring
With the token of the service account named myrelease-checkmk-checkmk in the namespace checkmk-monitoring you can now issue queries against the cluster-collector.
Run the following to fetch its token and the ca-certificate of the cluster:
  export TOKEN=$(kubectl get secret myrelease-checkmk-checkmk -n checkmk-monitoring -o=jsonpath='{.data.token}' | base64 --decode);
  export CA_CRT="$(kubectl get secret myrelease-checkmk-checkmk -n checkmk-monitoring -o=jsonpath='{.data.ca\.crt}' | base64 --decode)";
  # Note: Quote the variable when echo'ing to preserve proper line breaks: echo "$CA_CRT"
To test access you can run:
curl -H "Authorization: Bearer $TOKEN" http://$NODE_IP:$NODE_PORT/metadata | jq

From this output, simply copy the colored lines and execute the commands.

The first block shows you information about the NodePort:

user@host:~$ export NODE_PORT=$(kubectl get --namespace checkmk-monitoring -o jsonpath="{.spec.ports[0].nodePort}" services myrelease-checkmk-cluster-collector);

user@host:~$ export NODE_IP=$(kubectl get nodes --namespace checkmk-monitoring -o jsonpath="{.items[0].status.addresses[0].address}");

user@host:~$ echo http://$NODE_IP:$NODE_PORT

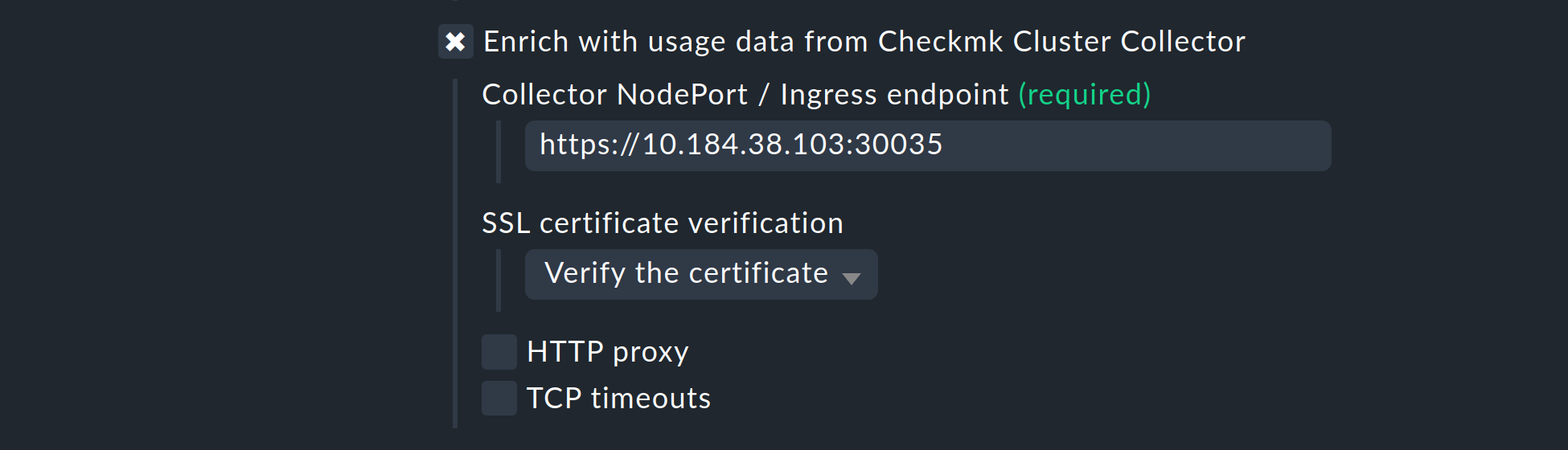

http://10.184.38.103:30035

This is exactly the address you need to enter in Checkmk later in the Kubernetes rule in the Collector NodePort / Ingress endpoint field.

With the commands from the next block you get both the token and the certificate for the service account.

The data is thus stored in the TOKEN and CA_CRT environment variables.

When outputting the CA_CRT variable, be sure to enclose it in double quotes, otherwise the important line breaks in the certificate will be lost.

user@host:~$ export TOKEN=$(kubectl get secret myrelease-checkmk-checkmk -n checkmk-monitoring -o=jsonpath='{.data.token}' | base64 --decode);

user@host:~$ export CA_CRT="$(kubectl get secret myrelease-checkmk-checkmk -n checkmk-monitoring -o=jsonpath='{.data.ca\.crt}' | base64 --decode)";

user@host:~$ echo $TOKEN

eyJhbGciOiJSUzI1NiIsImtpZCI6InR6VXhGSU ...

user@host:~$ echo "$CA_CRT"

-----BEGIN CERTIFICATE-----

MIIBdjCCAR2gAwIBAgIBADAKBggqhkjOPQQDAjAjMSEwHwYDVQQDDBhrM3Mtc2Vy

dmVyLWNhQDE2NjIxNDc5NTMwHhcNMjIwOTAyMTk0NTUzWhcNMzIwODMwMTk0NTUz

...

-----END CERTIFICATE-----

When setting up in Checkmk, you must store both the token and the certificate. Leave the shell open with this information or copy the token and certificate to a location you can access during the following setup in Checkmk.

If you have executed the previous two export commands, you can use the last command to verify that the setup was successful:

user@host:~$ curl -H "Authorization: Bearer $TOKEN" http://$NODE_IP:$NODE_PORT/metadata | jq
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  1815  100  1815    0     0   126k      0 --:--:-- --:--:-- --:--:--  126k
{
  "cluster_collector_metadata": {
    "node": "mynode",
    "host_name": "myrelease-checkmk-cluster-collector-58f97df9c9-mdhsw",
    "container_platform": {
      "os_name": "alpine",
      "os_version": "3.15.4",
      "python_version": "3.10.4",
      "python_compiler": "GCC 10.3.1 20211027"
    },
    "checkmk_kube_agent": {
      "project_version": "1.0.1"
    }
  }
...

At the beginning of the highly abbreviated output, for example, you can see the Cluster Collector’s version. Further down, metadata for all of the nodes in this cluster would follow.
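If you only need the Cluster Collector’s version from this response, for example to compare it with the chart version, it can be extracted directly. The following is a sketch under assumptions: the function name collector_version is hypothetical, the field names are taken from the sample output above, and the fallback without jq is a crude text match that simply returns the first project_version it finds.

```shell
# Print the Cluster Collector version from a /metadata response on stdin.
collector_version() {
  if command -v jq >/dev/null 2>&1; then
    jq -r '.cluster_collector_metadata.checkmk_kube_agent.project_version'
  else
    # Fallback without jq: first "project_version" value in the text.
    sed -n 's/.*"project_version"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
  fi
}

# Demonstration with a reduced, syntactically complete copy of the sample output.
collector_version <<'EOF'
{
  "cluster_collector_metadata": {
    "node": "mynode",
    "checkmk_kube_agent": {
      "project_version": "1.0.1"
    }
  }
}
EOF
```

With the environment variables from above still set, the call against the live endpoint would be curl -s -H "Authorization: Bearer $TOKEN" http://$NODE_IP:$NODE_PORT/metadata | collector_version.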

3. Setting up the monitoring in Checkmk

Next, in Checkmk’s GUI, we move on to setting up the special agent and a rule for automatically creating hosts for your Kubernetes objects. To set up the special agent, however, a few prerequisites must first be met:

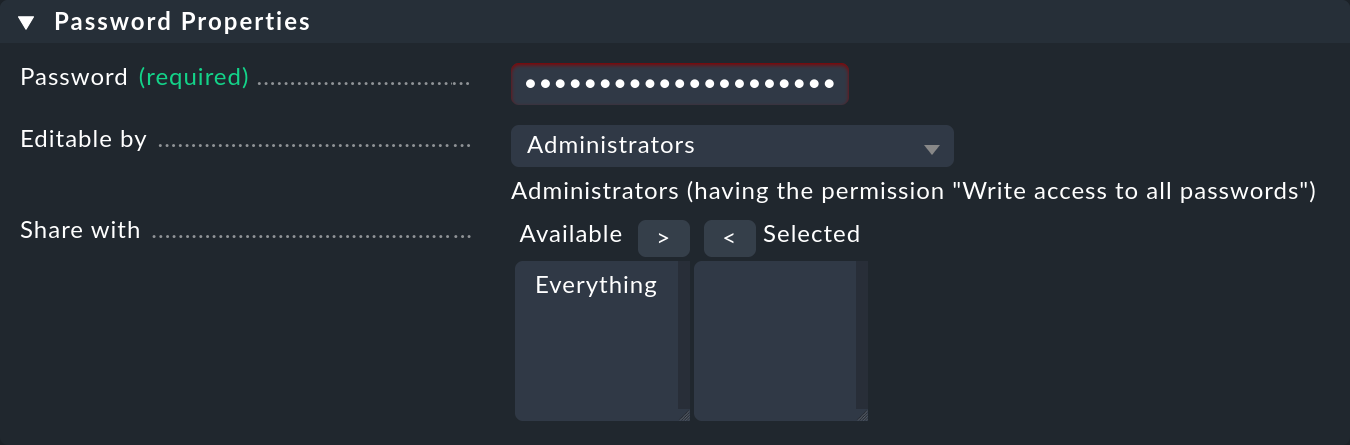

3.1. Storing the password (token) in Checkmk

It is best to store the password (token) for the service account in the Checkmk password store.

This is the safest option, as you can separate the storage and use of the password organizationally.

Alternatively, enter the password directly in plain text when creating the rule (see below).

For information on how to display the required password, see the Helm chart output.

Add the password to the Checkmk password store with Setup > General > Passwords > Add password e.g. under the ID and the title My Kubernetes Token:

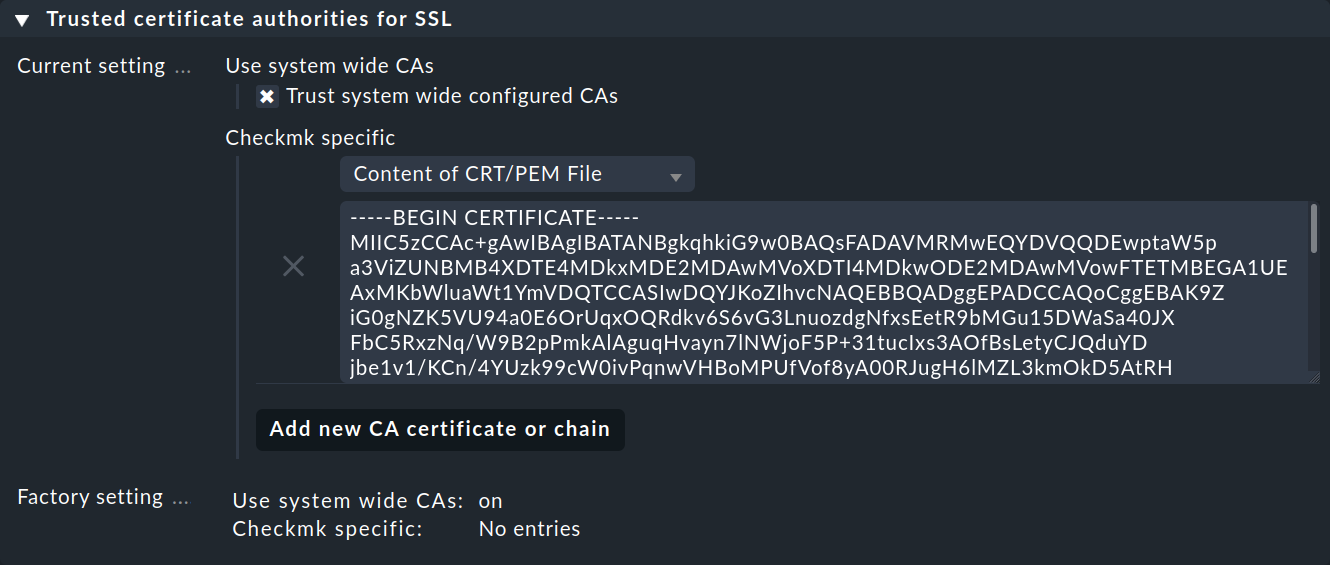

3.2. Importing a service account’s CA certificate into Checkmk

In order for Checkmk to trust the service account’s Certificate Authority (CA), you must store the CA certificate in Checkmk.

The Helm chart output also shows how to display the required certificate.

Copy everything here including the lines BEGIN CERTIFICATE and END CERTIFICATE and add the certificate in the Setup menu under Setup > General > Global settings > Site management > Trusted certificate authorities for SSL:

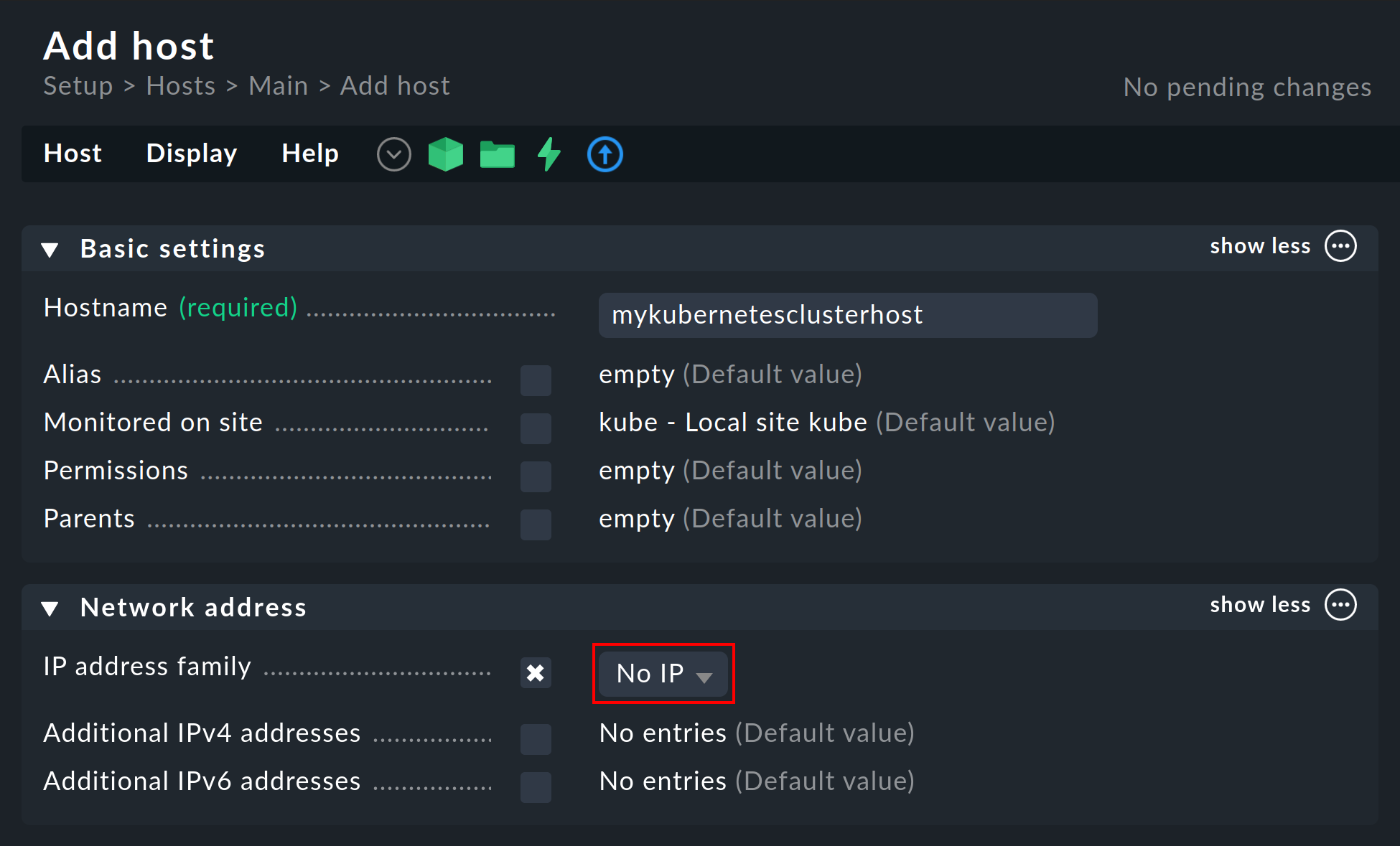

3.3. Creating a Piggyback source host

Create a new host in Checkmk in the usual way and name it mykubernetesclusterhost for example.

As the title and host name suggest, this host is used to collect the piggyback data and also to map all services and metrics at the cluster level.

Since this host only receives data via the special agent, be sure to set the IP address family option to No IP in the host’s properties.

3.4. Setting up dynamic host configuration

To ensure separation between the objects of different Kubernetes clusters, it can be useful to create a folder per cluster via Setup > Hosts > Add folder, in which the dynamic host configuration can automatically create all hosts of a cluster.

However, creating or using such a folder is optional.

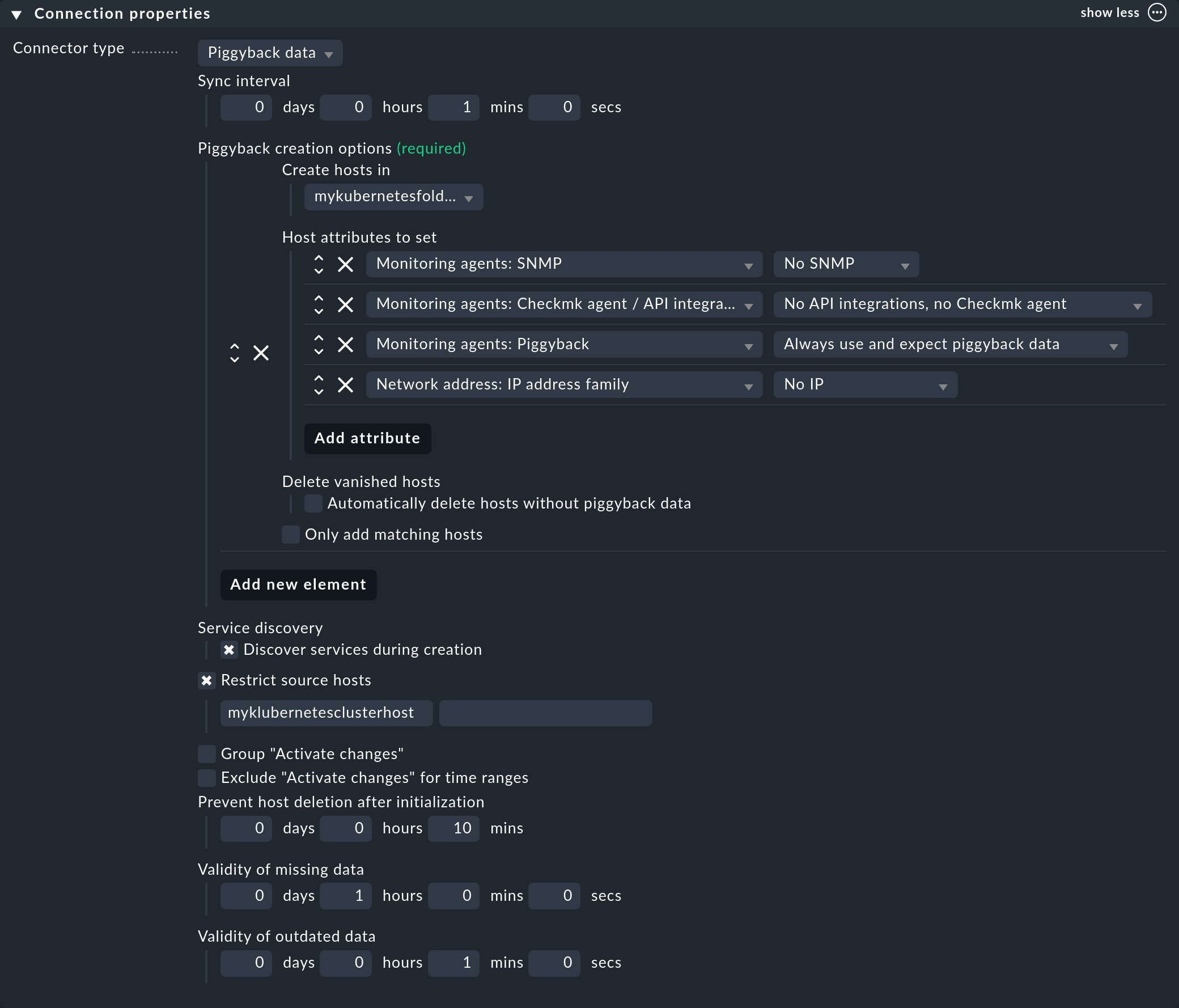

Next, in the Checkmk Enterprise Editions, set up a connector for the incoming piggyback data with Setup > Hosts > Dynamic host management > Add connection.

First enter a title and then click on show more under Connection Properties.

Next, click Add new element and select the previously created folder under Create hosts in.

Leave the default attributes under Host attributes to set as they are. They ensure that Checkmk relies only on the piggyback data for the automatically created hosts and does not attempt to ping them or reach them via SNMP, for example.

In a Kubernetes environment where monitorable and monitored objects come and go continuously, it is also recommended to activate the option Automatically delete hosts without piggyback data. What exactly this option does and under which circumstances hosts are then actually deleted is explained in the chapter Automatic deletion of hosts in the article on dynamic host configuration.

Now enter the previously created Piggyback source host under Restrict source hosts and activate the option Discover services during creation.

The Connection Properties section of this new connector could then look like this:

3.5. Processing piggyback data in the Raw Edition

In the Raw Edition of Checkmk you will need to create the hosts for the accumulating piggyback data manually.

Because a large number of piggyback hosts are likely to arise here in a Kubernetes cluster, we recommend using our find_piggy_orphans script in your Checkmk site’s ~/share/doc/check_mk/treasures/ directory.

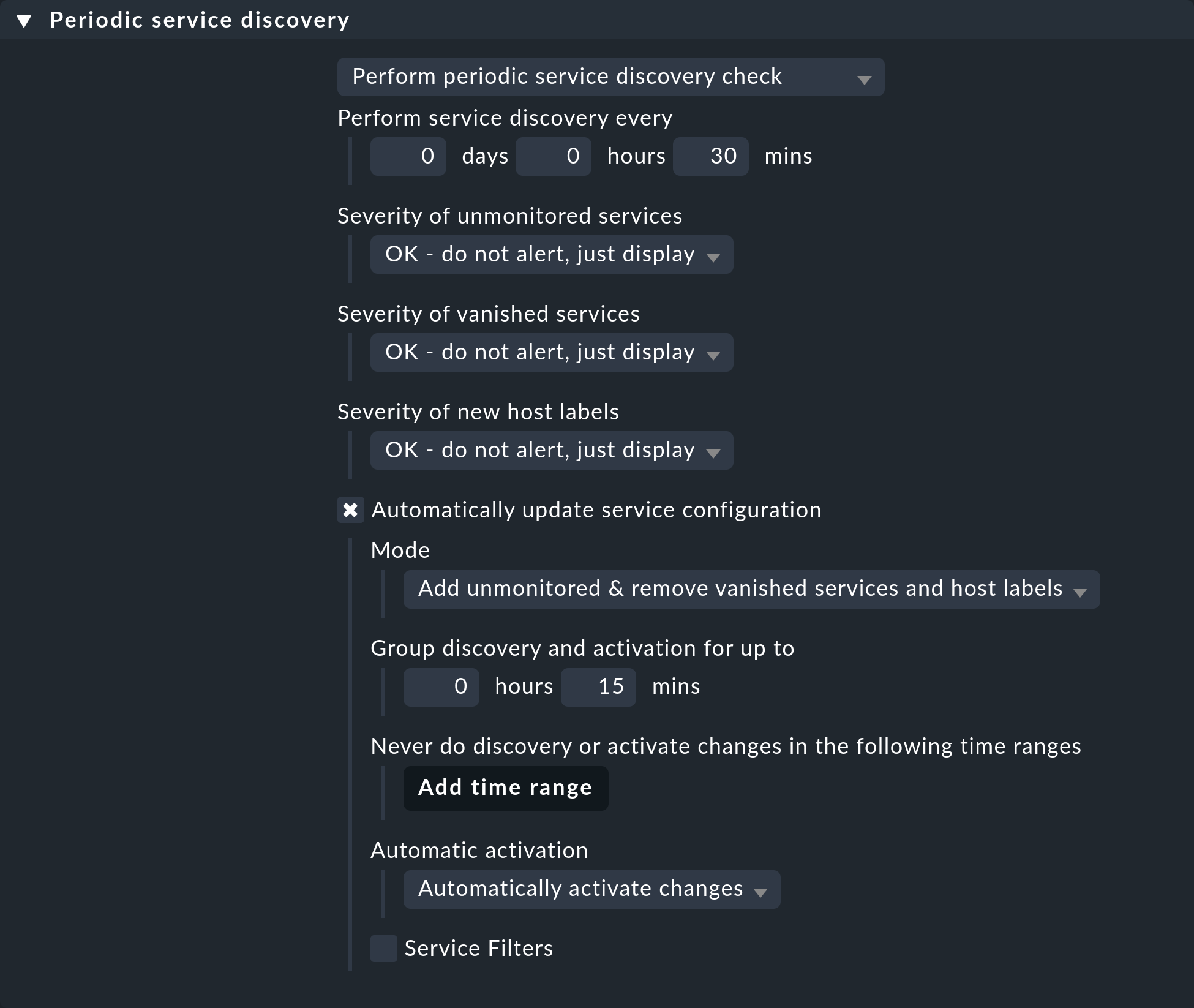

3.6. Customizing the periodic service discovery

By default, Checkmk performs a service discovery every two hours and displays the result from this discovery in the Check_MK Discovery service.

You can find this setting in the Periodic service discovery rule set.

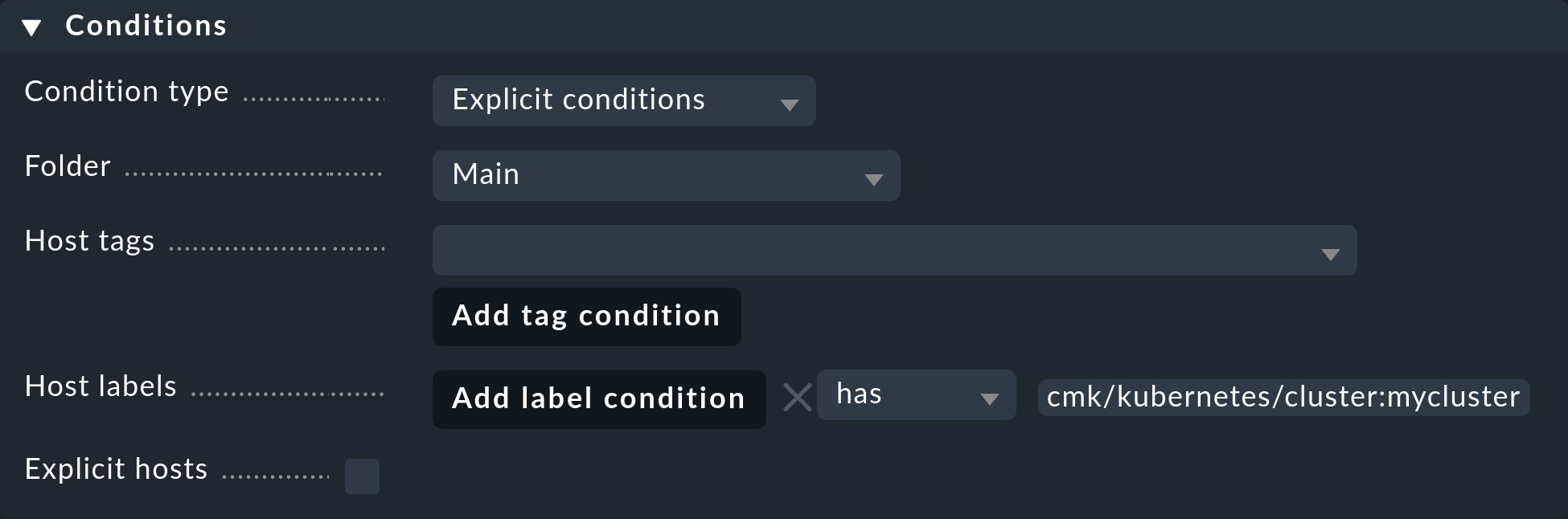

In the context of Kubernetes, we recommend creating a rule for all hosts with the label cmk/kubernetes:yes.

This label is automatically given by Checkmk to every host that represents Kubernetes objects.

You should select a shorter interval for the service discovery here, and also activate the Automatically update service configuration option.

The settings in the following screenshot are only examples.

You will need to decide what makes sense for your clusters on a case-by-case basis.

To restrict this rule to all of your cluster’s hosts, it is sufficient to enter cmk/kubernetes:yes in the Conditions under Host labels.

However, if you want to create individual rules for multiple clusters, simply use the respective cluster-specific label here.

These labels always have the form cmk/kubernetes/cluster:mycluster.

3.7. Setting up the special agent

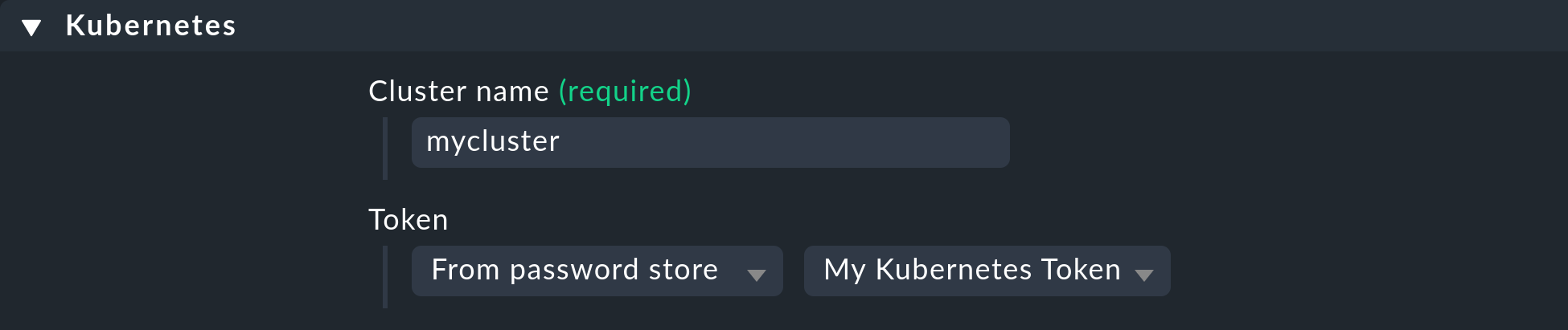

Now that all the prerequisites have been created in the cluster and in Checkmk, you can turn your attention to the configuration of the special agent. You can find this via Setup > Agents > VM, Cloud, Container > Kubernetes. Create a new rule with Add rule.

First of all, you must assign a name for the cluster to be monitored.

You can choose this name freely.

It is used to give a unique name to all objects that come from this particular cluster.

For example, if you enter mycluster here, the names of the hosts of all pods from this cluster will later begin with pod_mycluster.

The next part of the host name will then always be the namespace in which this Kubernetes object exists.

The host name of a pod could then be pod_mycluster_kube-system_svclb-traefik-8bgw7, for example.
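The naming scheme described here can be written down as a small helper, which may be handy when preparing host filters or rule conditions. This is only an illustration: the function name kube_host_name is made up, and the pattern is derived solely from the pod example above, so it is an assumption that other object types follow the same scheme.

```shell
# Compose a host name from object type, cluster name, namespace and object name.
# $1: object type, $2: cluster name, $3: namespace, $4: object name
kube_host_name() {
  printf '%s_%s_%s_%s\n' "$1" "$2" "$3" "$4"
}

# Reproduces the pod example from the text:
kube_host_name pod mycluster kube-system svclb-traefik-8bgw7
# prints: pod_mycluster_kube-system_svclb-traefik-8bgw7
```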

Under Token, now select the previously created entry from the Checkmk password store.

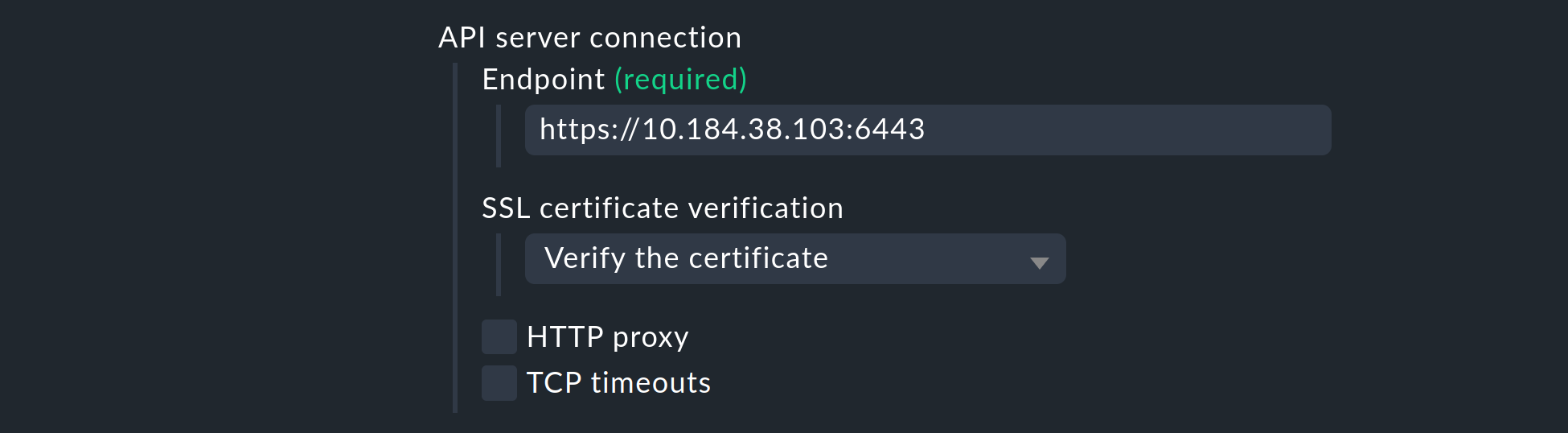

Under API server connection > Endpoint, Checkmk now asks you to enter the URL (or IP address) through which your Kubernetes API server can be accessed.

You only need to enter the port if the service is not provided via a virtual host.

The easiest way to find out this address — if you don’t already have it at hand — will depend on your Kubernetes environment.

The following command will give you the API server’s endpoint in the server line:

user@host:~$ kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://DFE7A4191DCEC150F63F9DE2ECA1B407.mi6.eu-central-1.eks.amazonaws.com
  name: xyz:aws:eks:eu-central-1:150143619628:cluster/my-kubernetes

However, the actual output of kubectl config view varies greatly.

If a port is also specified here in the server line, be sure to include it in the rule as well.
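Because the output of kubectl config view can be long, it may help to reduce it to the server lines. The sketch below does this with a simple text filter; the function name api_endpoints is hypothetical, and it assumes that each endpoint appears on a line of the form server: URL, as in the sample output above. A port, if present, is part of the URL and is printed along with it.

```shell
# Print the value of every "server:" line found in the text on stdin.
api_endpoints() {
  awk '$1 == "server:" { print $2 }'
}

# Demonstration with the sample output from this article.
api_endpoints <<'EOF'
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://DFE7A4191DCEC150F63F9DE2ECA1B407.mi6.eu-central-1.eks.amazonaws.com
  name: xyz:aws:eks:eu-central-1:150143619628:cluster/my-kubernetes
EOF
```

On a machine with access to the cluster, the call would be kubectl config view | api_endpoints. If your kubeconfig contains several clusters, one line is printed per cluster.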

If you have so far followed these instructions step by step and have deposited your cluster’s CA certificate — as described above — in Checkmk, select under SSL certificate verification the Verify the certificate entry.

Next, you have the opportunity to enrich the monitoring of your Kubernetes cluster with usage data collected by the Checkmk Cluster Collector. We repeat it once more here to emphasize the importance: The setup of the Cluster Collector is absolutely essential for a fully comprehensive monitoring of your clusters. This is the only way to obtain important data such as CPU and memory utilization and to receive information on the file systems used by the individual components.

So activate the Enrich with usage data from Checkmk Cluster Collector option and specify the endpoint of the NodePort or Ingress. The Helm chart output shows how to display this endpoint again.

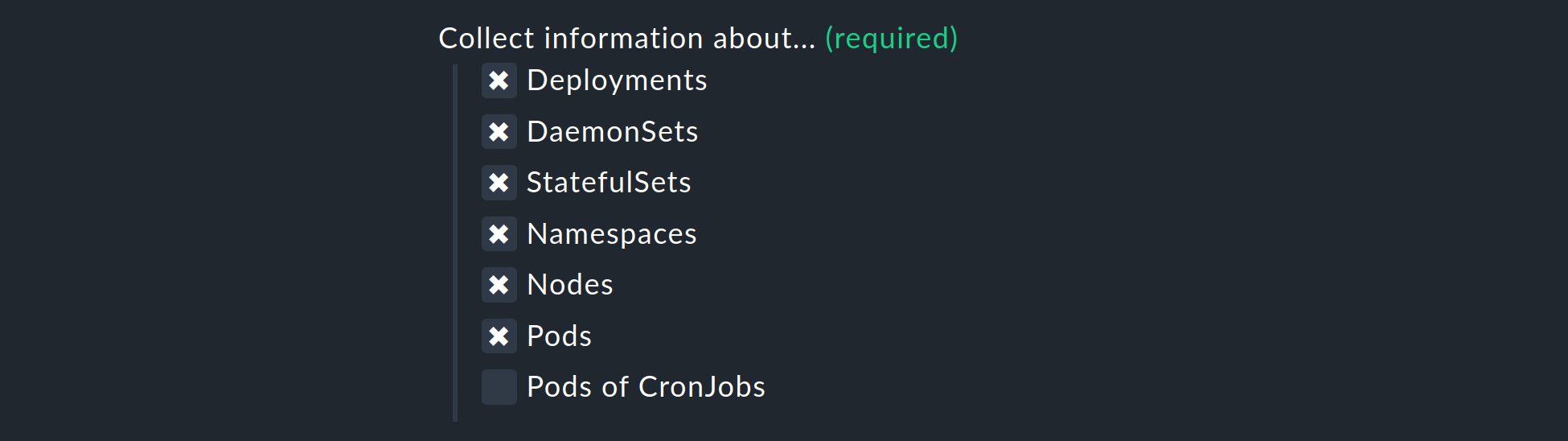

With the Collect information about… options, you can now select which objects within your cluster are to be monitored. Our preselection covers the most relevant objects. If you decide to also monitor the Pods of CronJobs, please refer to the inline help on this point.

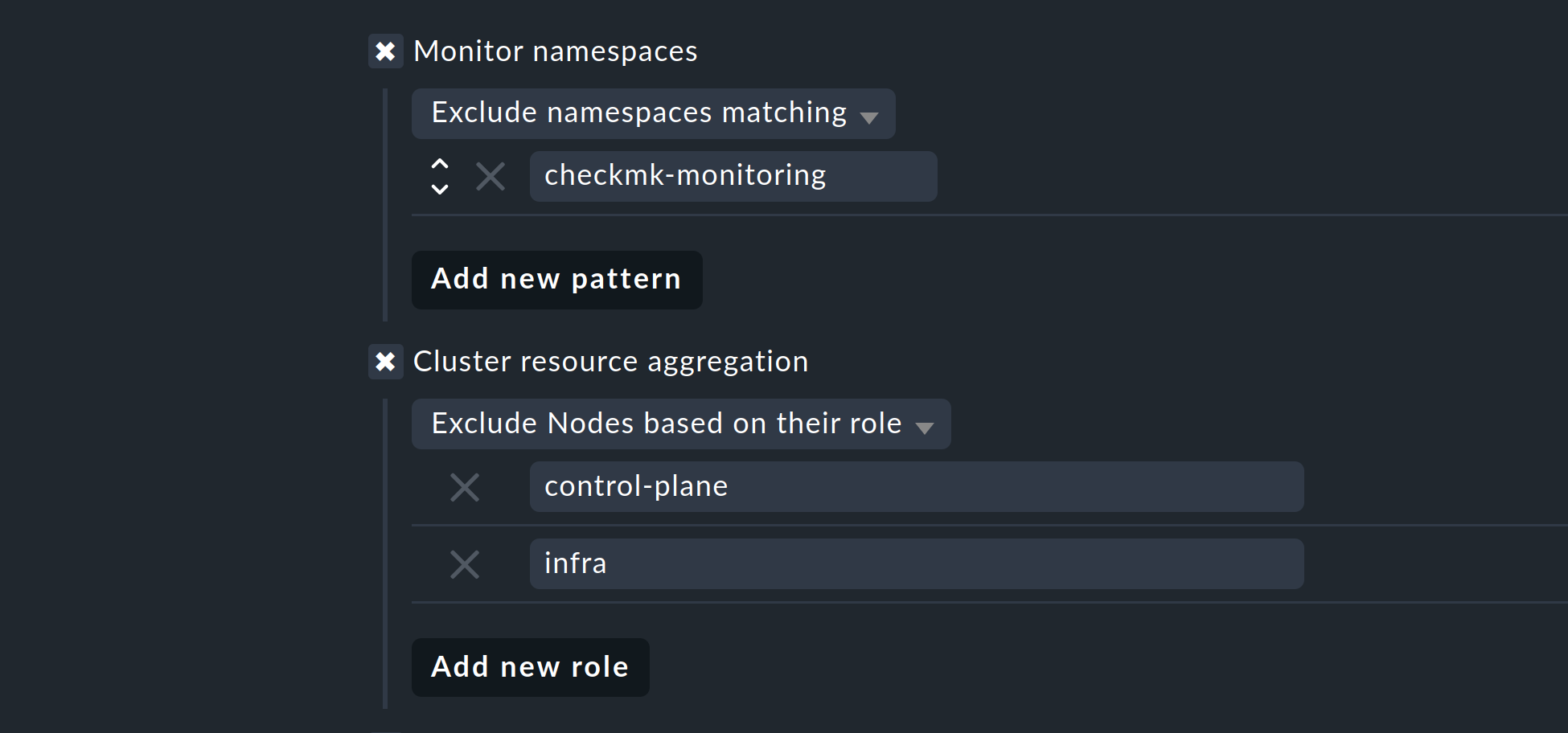

With the next two options, you can further limit the objects to be monitored. If you are only interested in objects from certain namespaces, set this accordingly under Monitor namespaces. Here you can either enter individual namespaces to be monitored or explicitly exclude individual namespaces from monitoring.

With the Cluster resource aggregation option, you can specify nodes that do not provide resources for the workload of your cluster.

These nodes should be excluded from the calculation of available resources, otherwise there is a risk that capacity bottlenecks will not be detected.

By default, we therefore exclude the control-plane and infra nodes from the evaluation.

As a final option, you can import the so-called annotations from Kubernetes. In Checkmk, these annotations become host labels and can thus be used as conditions in rules. You can specify which annotations are to be imported using regular expressions. Again, consult the detailed inline help at this point.

Note: The Import all valid annotations option is provided here only for completeness. We do not recommend blindly importing all annotations, as this can create a very large mountain of useless labels in Checkmk.

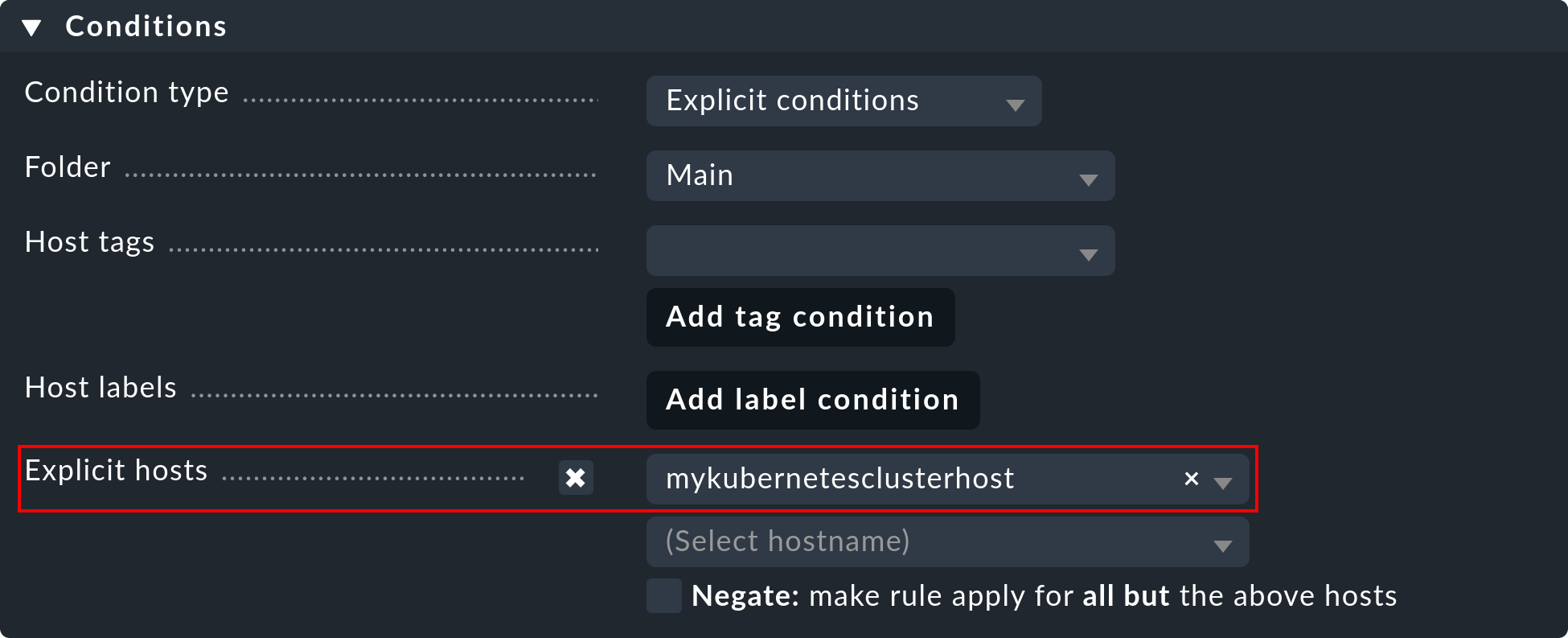

Important: Under Conditions > Explicit hosts you must now enter the previously-created host:

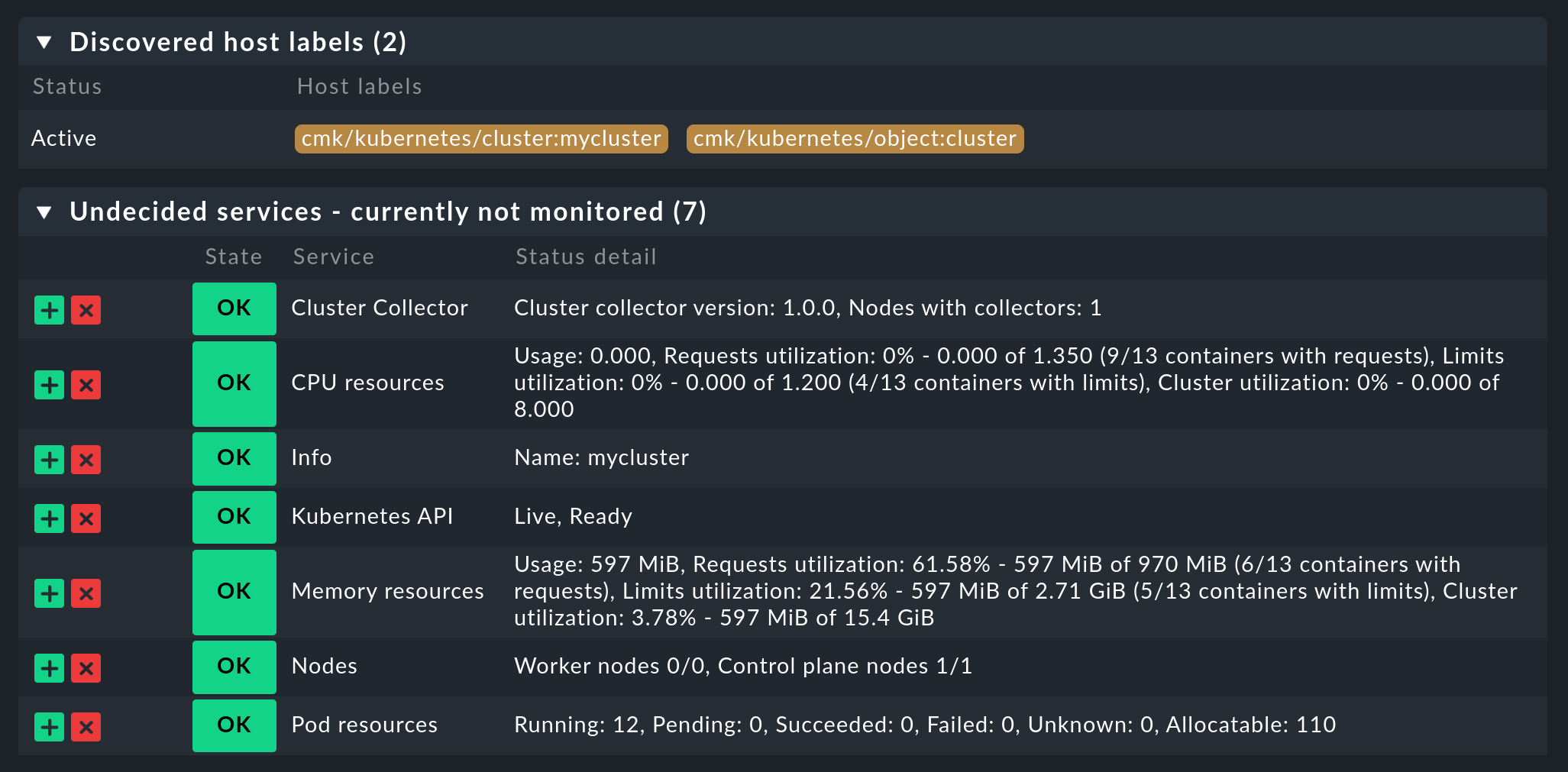

Then save the rule and perform a service discovery on this host. You will immediately see the first cluster-level services here:

Now activate all of the changes you have made and let the dynamic host configuration do the work for you. This will create all hosts for your Kubernetes objects within a short space of time.

4. Labels for Kubernetes objects

Checkmk automatically generates labels for Kubernetes objects such as clusters, deployments or namespaces during a service discovery.

All of the labels for Kubernetes objects that Checkmk automatically generates start with cmk/kubernetes/.

For example, a pod always receives a label for the node (cmk/kubernetes/node:mynode), a label that shows that this object is a pod (cmk/kubernetes/object:pod) and a label for the namespace (cmk/kubernetes/namespace:mynamespace).

This makes it very easy to create filters and rules for all objects of the same type or in the same namespace.

5. Dashboards and views

5.1. Kubernetes dashboards

The Checkmk Enterprise Editions are supplied with six built-in dashboards for Kubernetes.

In order to use these dashboards in a practical way, it is necessary to install and configure our Cluster Collector.

Specifically, these six dashboards are called:

Kubernetes

Kubernetes Cluster

Kubernetes DaemonSet

Kubernetes Deployment

Kubernetes Namespace

Kubernetes StatefulSet

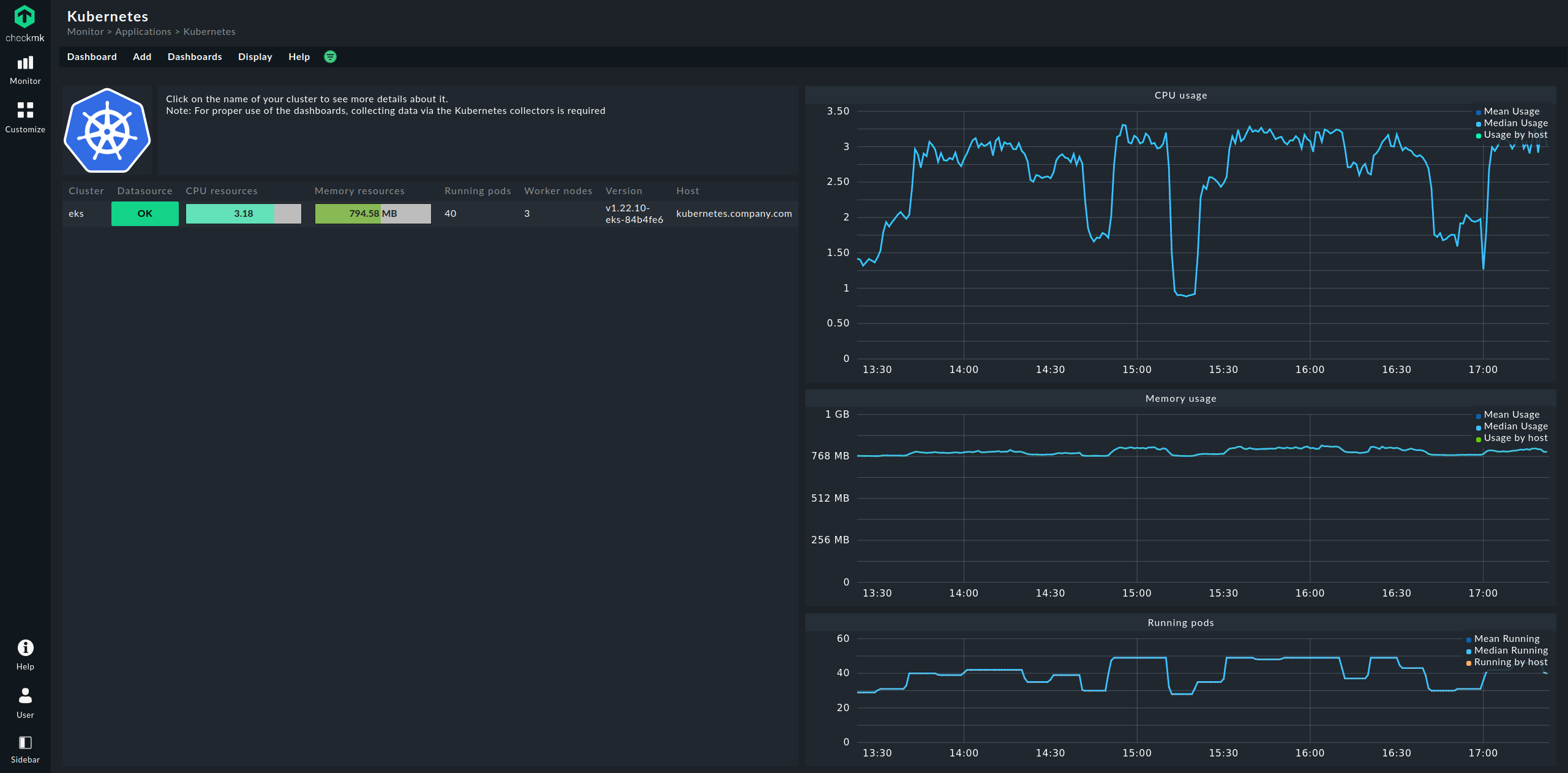

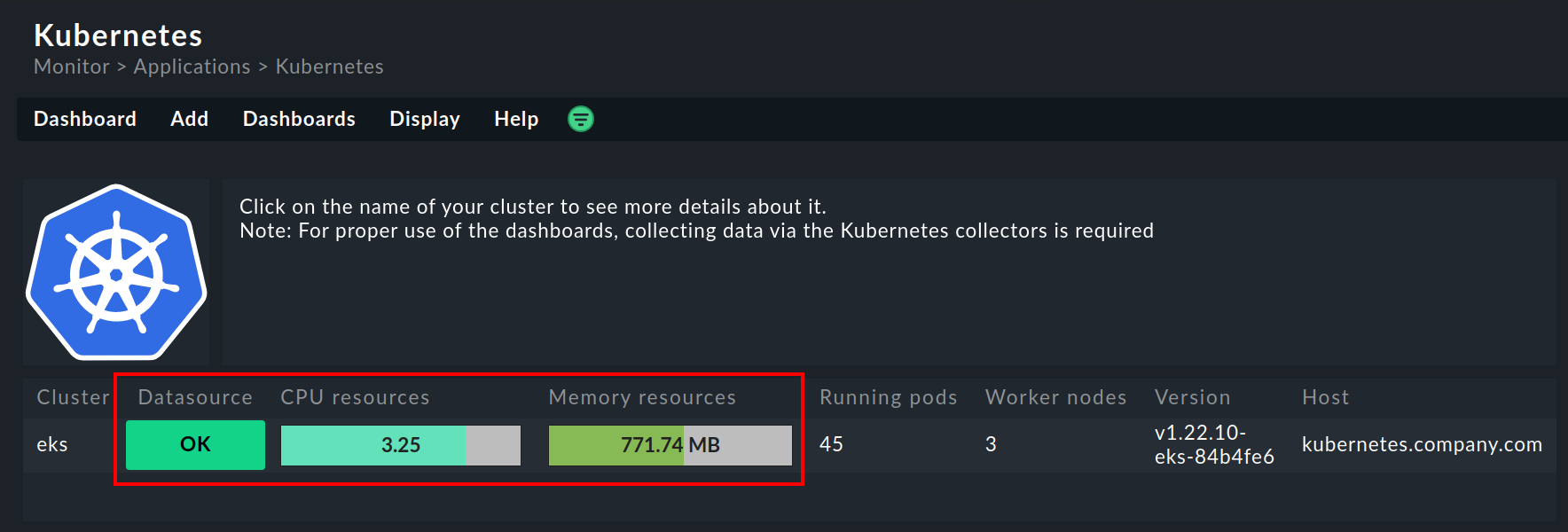

The entry point is always the Kubernetes dashboard, which you can access via Monitor > Applications > Kubernetes:

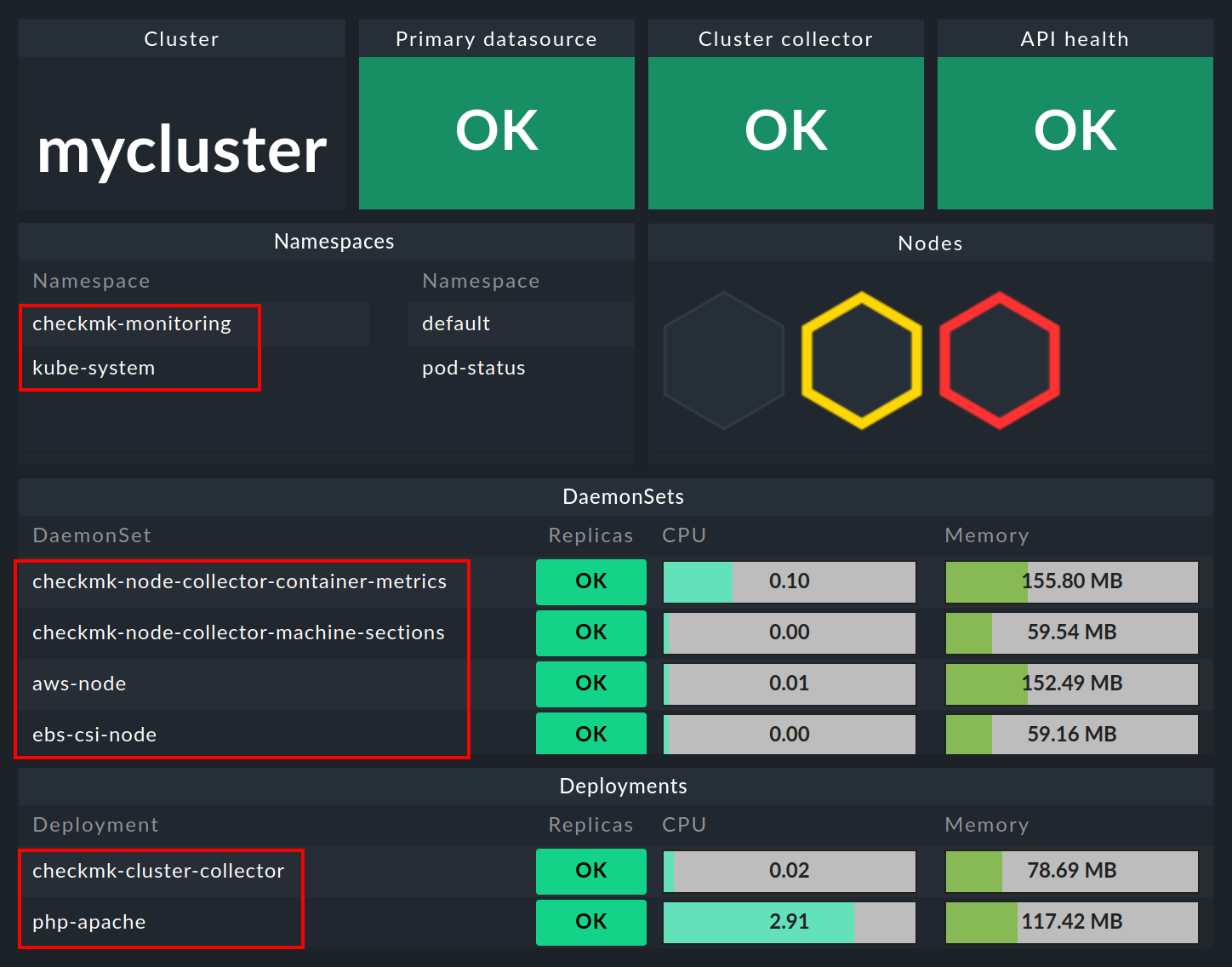

In the Kubernetes dashboard, all of your Kubernetes clusters being monitored will be listed on the left-hand side. This listing of clusters is also your entry point to drill deeper into Kubernetes dashboards. Clicking on the name of a cluster will take you to the selected cluster’s Kubernetes Cluster dashboard. In the Kubernetes Cluster dashboard, clicking on the respective name then takes you to the other context-dependent dashboards:

5.2. The Hardware/Software inventory

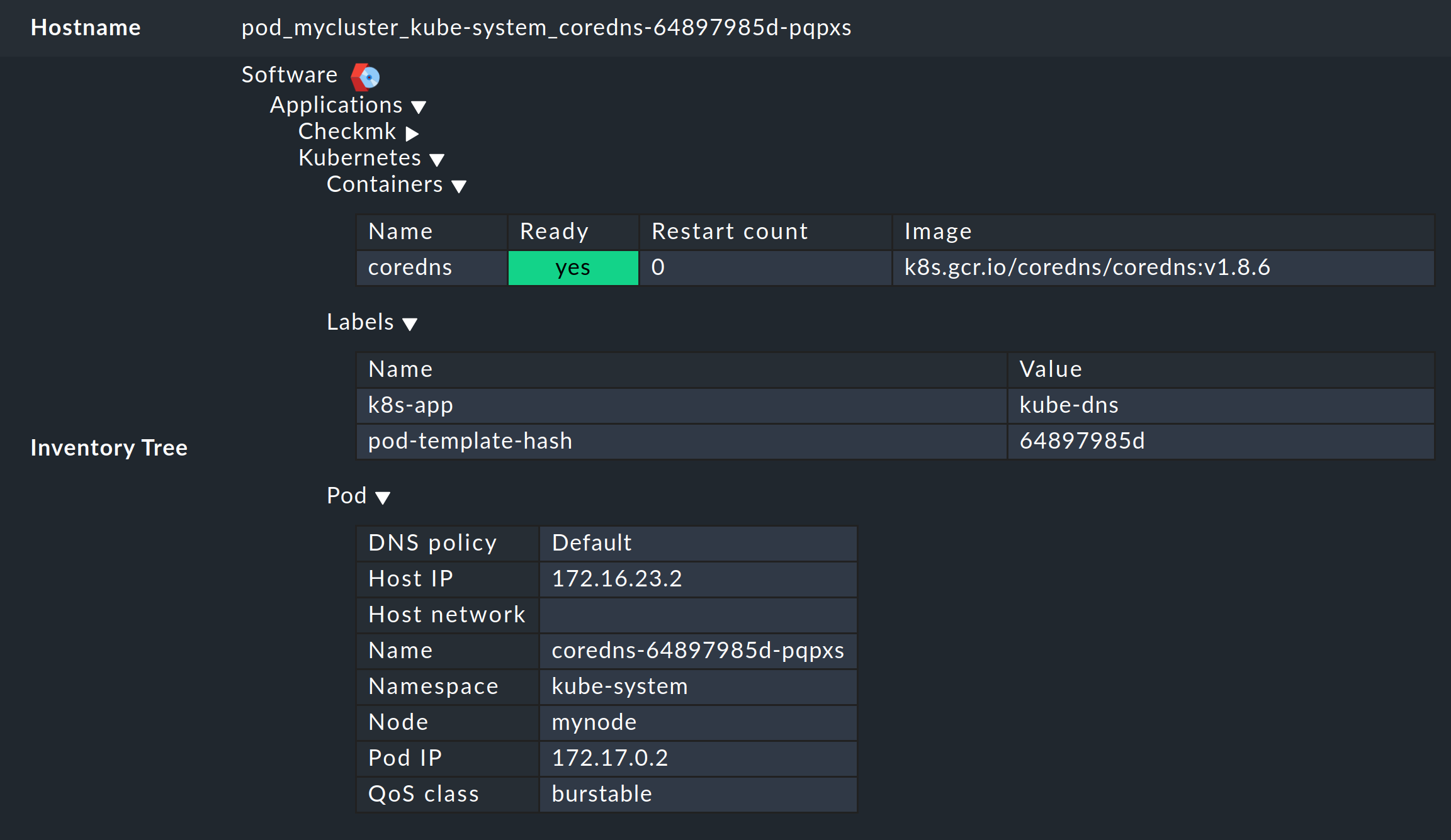

The Checkmk Kubernetes monitoring also supports the HW/SW inventory. For example, if you click on the cluster’s primary name (here: mycluster) in the above cluster dashboard, you will jump to the cluster’s inventory.

In the same way, i.e. via the boxes with the primary names of the objects, you will also get to the inventory for the respective object in the other dashboards. The following example shows the HW/SW inventory from a pod:

6. Checking the installation

In the Helm chart output section, you have already learned the first method for checking that the components for a comprehensive monitoring of Kubernetes have been successfully installed. In the Checkmk GUI you can also check the successful installation and configuration in a number of places.

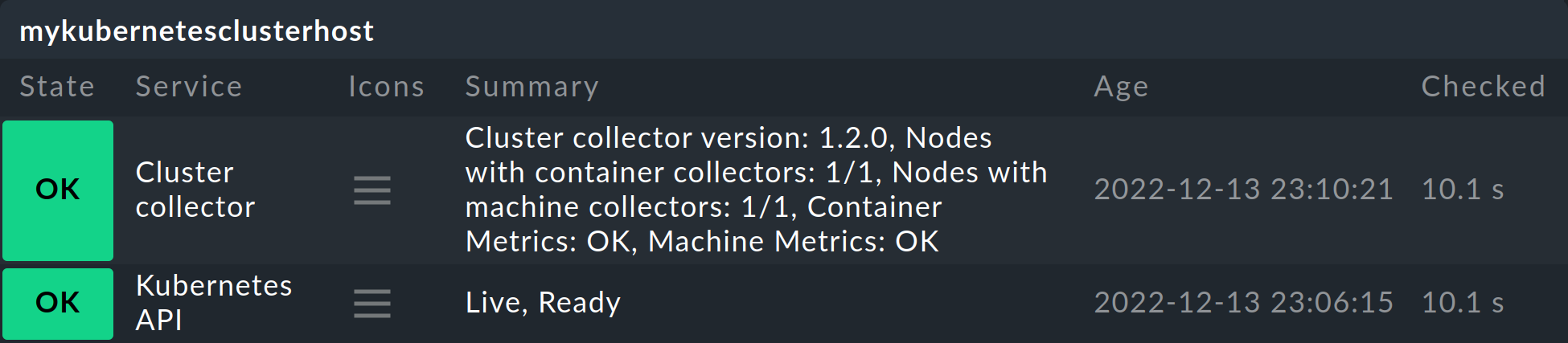

The most important services here are definitely Kubernetes API and Cluster Collector. These must be present on the cluster host you have created and should also display certain information.

The Kubernetes API service should normally report Live, Ready under Summary. The service Cluster Collector must show the version number of the installed Cluster Collector. If this is not the case for one or the other of these, you must check the installation of the Helm charts and the configuration of the special agent.

Further possibilities for checking are provided in the Checkmk Enterprise Editions cluster dashboards.

In the Kubernetes dashboard, you can see very early on whether the Cluster Collector is running in a cluster and collecting data. If the columns CPU resources and Memory resources do not contain any data, this is already a strong indicator that the Cluster Collector is not running properly. If set up correctly, the Kubernetes dashboard should look something like this:

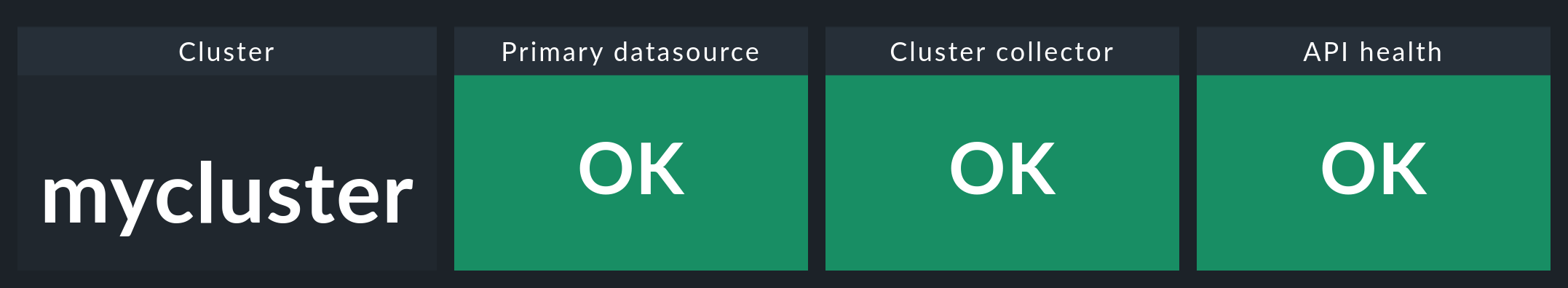

If you now click on the name of the cluster in this window, you will land in the respective cluster’s Kubernetes Cluster dashboard. Here the three boxes Primary datasource, Cluster collector and API health should be green and show OK.

7. Removing monitoring components from a cluster

If you have deployed Checkmk to your cluster using our Helm charts, you can remove the created accounts, services, pods and node ports just as easily as you set them up. To do this, simply uninstall the release that was installed using our charts.

If you are unsure of the name of the release, first display all Helm releases in all namespaces:

user@host:~$ helm list --all-namespaces
NAME        NAMESPACE            REVISION   UPDATED                                    STATUS     CHART           APP VERSION
myrelease   checkmk-monitoring   1          2022-08-15 19:00:42.318666784 +0200 CEST   deployed   checkmk-1.0.1   1.0.1

As in the example output above, you should find a release here that contains a reference to Checkmk in the CHART column.

Remove this release with the following command, specifying the correct namespace:

user@host:~$ helm uninstall myrelease -n checkmk-monitoring

release "myrelease" uninstalled
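If many releases are installed, the Checkmk release can be picked out of the helm list output automatically. The following sketch makes some assumptions: the function name checkmk_releases is made up, the chart name is expected to start with checkmk- as in the example above, and the APP VERSION column must not be empty, because the chart is identified as the second-to-last field of each line.

```shell
# Print release name and namespace for every release whose CHART column
# starts with "checkmk-". Reads `helm list --all-namespaces` output from stdin.
checkmk_releases() {
  awk 'NR > 1 && $(NF - 1) ~ /^checkmk-/ { print $1, $2 }'
}

# Demonstration with the sample output from this article.
checkmk_releases <<'EOF'
NAME        NAMESPACE            REVISION   UPDATED                                    STATUS     CHART           APP VERSION
myrelease   checkmk-monitoring   1          2022-08-15 19:00:42.318666784 +0200 CEST   deployed   checkmk-1.0.1   1.0.1
EOF
```

The two printed values are exactly the release name and the namespace that the helm uninstall command above expects.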