1. The Checkmk Micro Core’s special features

In comparison with Nagios, the CMC's most significant advantages are its higher performance and faster reaction times. It has further interesting advantages of which you should be aware — the most important of which are:

Smart Ping — Intelligent host checks

Auxiliary processes Check helper, Checkmk fetcher and Checkmk checker

Initial Scheduling

Processing of performance data

A couple of Nagios' functions are not used, or are achieved using a different procedure in the CMC. The details on this can be found in the article on the migration to the CMC.

2. Smart Ping — Intelligent host checks

With Nagios the availability of hosts is usually verified using a Ping.

For this purpose, for every host a plug-in such as check_ping or check_icmp is executed once per interval (generally once per minute).

This sends, for example, five ping packets and waits for their return.

Creating the processes for executing the plug-ins ties up valuable CPU resources.

Furthermore, these can form a backlog for quite a long time if a host is not reachable, and long timeouts must be endured.

By contrast, CMC runs host checks — unless otherwise configured — using a procedure called Smart Ping.

With this a single ping packet is sent per host per interval.

This is performed by an auxiliary process named icmpsender — directly and without a process creation.

For this purpose the icmpsender has its own ping implementation, which we have developed and which is markedly efficient.

In benchmarking using only ping we could generate network traffic of up to 45 MBit/sec!

The interval for pings per host is set to six seconds by default,

but you can redefine this via the Normal check interval for host checks rule set.

The responses to the pings are not explicitly waited-for.

Incoming ping packets are simply noted as successful checks.

The auxiliary process icmpreceiver is responsible for this.

If no packet is received from a host within a defined time, this host will be flagged as DOWN.

This timeout time is predefined at 15 seconds (2,5 intervals) and can also be altered per host via the Settings for host checks via Smart PING rule set.

2.1. No on-demand host checks

Host checks not only serve to trigger notifications in the case of a total host failure, but also to suppress notifications of service problems during the host’s down time. It is therefore important to quickly and accurately determine a host’s condition in the event of a service problem. Even if it has not been long since the last host check, this result can already be out of date. For this, it is sufficient when directly after a host failure, by chance the service check is executed first, rather than the host check. Even though it is down, the host would still be considered UP and the notification for the service would be sent — erroneously.

The CMC solves this problem very simply:

if during a service problem the host is in an UP state, then the next host check will simply be waited-for.

Due to the interval being very short at only six seconds, there is only a negligible delay to a notification — if the host is still UP and therefore the notification needs to be sent for the service.

The CMC uses an additional trick — the icmpreceiver evaluates not only incoming ping responses, but also packets resulting from TCP connection establishments: SYN (synchronization) and RST (reset).

Keep in mind, that the icmpreceiver will ignore each and every SNMP packet as SNMP does not communicate over the TCP protocol but over UDP.

As an example, let’s take the case of a check_http plug-in delivering a CRIT status, due to a queried webserver being unavailable.

In this situation, following the start of the service check a TCP RST packet (connection refused) will be received from this server.

The CMC therefore knows for certain that the host itself is UP and it can thus send the notification without delay.

The same principle is utilised when calculating network outages if parent hosts have been defined. Here as well notifications will at times be delayed briefly in order to wait for a verified status.

2.2. The advantages

This procedure yields a number of advantages:

Virtually insignificant CPU load resulting from host checks — even without particularly powerful hardware umpteen thousand hosts can be monitored.

No thwarting of monitoring by on-demand host check jams if hosts are DOWN.

No false alarms from services when a host status is not current.

One disadvantage should not be hushed-up however: the Smart Ping host checks generate no performance data (packet runtimes). On hosts where these are required you can simply set up an active check via ping with the Check hosts with PING (ICMP Echo Request rule set.

2.3. Unpingable hosts

In practice, not all hosts are checkable by ping. For these cases other methods in CMC can also be used for the host check, e.g., a TCP connection. Because these are generally exceptions they have no negative impact on the overall performance. The rule set here is Host Check Command.

2.4. Problems with firewalls

There are firewalls that answer TCP connection packets to inaccessible hosts with a TCP RST packet. The trick is that the firewall is not permitted to register itself as the sender of this packet, rather the target host’s IP address must be specified. Smart Ping will view this packet as a sign of life and incorrectly assume that the target host is accessible.

In such a (rare) situation, via Global settings > Monitoring Core > Tuning of Smart PING you have the possibility of activating the Ignore TCP RST packets when determining host state option.

Or, with check_icmp you can select a conventional ping as a host check for the affected hosts.

3. Auxiliary processes

A lesson from the poor performance of Nagios in larger environments is that creating processes is a resource and time consuming operation. The size of the parent process is the decisive factor here. For every execution of an active check the complete Nagios process must first be duplicated (forked) before it is replaced by the new process — the check plug-in. The more hosts and services to be monitored, the larger this process and the fork respectively takes longer. In the meantime the core’s other tasks must wait — and here 24-CPU cores are not much help.

3.1. Check helper

In order to avoid forking the core, during the program start the CMC creates a fixed number of very lean auxiliary processes whose task is to start the active check plug-ins: the check helpers.

Not only do these fork much more quickly, but the forking also scales-up to cover all available cores because the core itself is no longer blocked.

In this way, the execution of active checks (e.g. check_http) — whose runtimes are actually quite short — is greatly accelerated.

3.2. Checkmk fetcher and Checkmk checker

The CMC goes a significant step further however — because in a Checkmk environment active checks are rather an exception. Here Checkmk-based checks — in which only a single fork per host and interval is required — are primarily used.

To optimise the execution of these checks, the CMC maintains two other types of auxiliary processes: the Checkmk fetchers and the Checkmk checkers:

The Checkmk fetchers

retrieve the required information from the monitored hosts, i.e. the data from the Check_MK and Check_MK Discovery services. The fetchers thus take over the network communication with the Checkmk agents, SNMP agents and the special agents. Gathering this information takes some time, but with regularly less than 50 MByte per process only a relatively little memory, so many of these processes can be configured without problems. Please keep in mind the processes are partially or completely not swapable and need to be kept in the physical memory. The limiting factor here is the available memory in the Checkmk server.

Note: The mentioned 50 MByte are an estimate for basic orientation. The actual value may be higher on specific circumstances — e.g. because IPMI has been configured on the Management board.

The Checkmk checkers

analyse and evaluate the information collected by the Checkmk fetchers and generate the check results for the services. The checkers need a lot of memory because they must have the checkmk configuration with them. A checker process occupies at least about 90 MByte — however, a multiple of this may be necessary, depending on how the checks are configured. On the other hand, the checkers do not cause any network load and are very fast in execution. The number of checkers should only be as large as your Checkmk server can process in parallel. As a rule, this number corresponds to the number of cores in your server. Since the checkers are not IO-bound, they are most effective if each checker has its own core.

The division of the two different 'collect' and 'execute' tasks between Checkmk fetcher and Checkmk checker exists since Checkmk version 2.0.0. Before, there was only one auxiliary process type that was responsible for both — the so-called Checkmk helpers.

With the fetcher/checker model, both tasks can now be split between two separate pools of processes: retrieving information from the network with many small fetcher processes and the computationally-intensive checking with a few large checker processes. As a result, a CMC uses up to four times less memory for the same performance (checks per second)!

3.3. Setting the number of auxiliary processes correctly

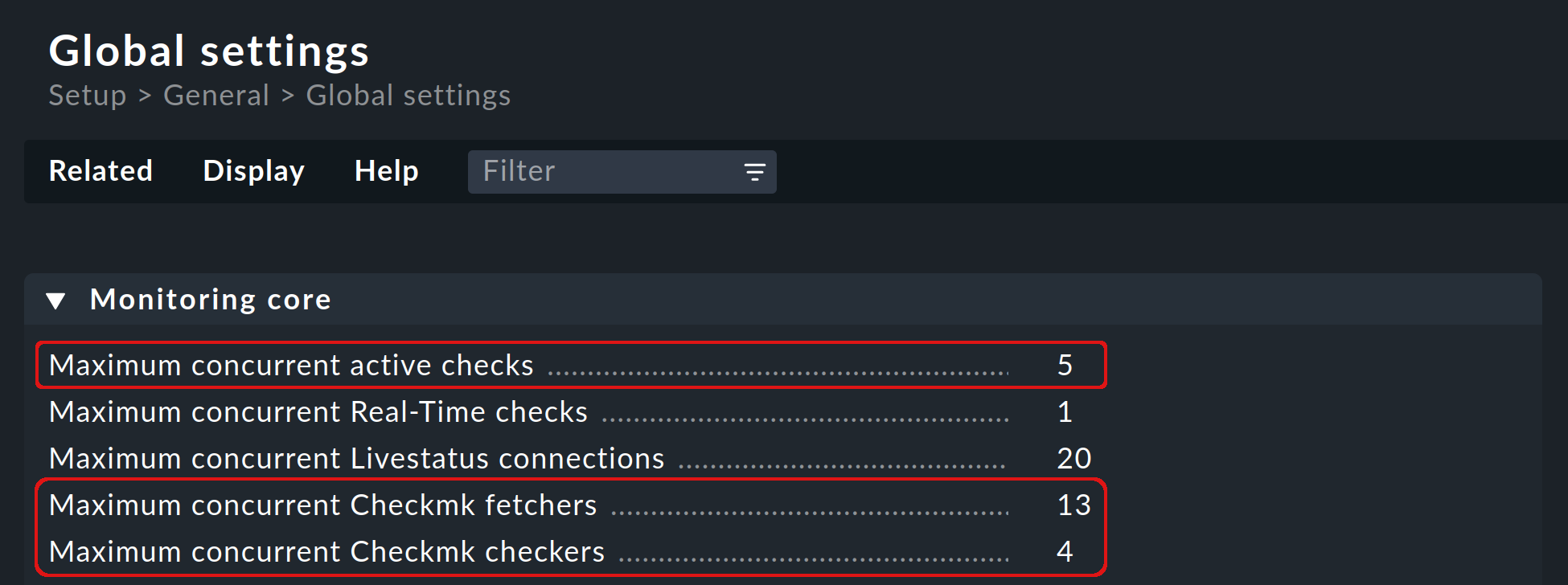

By default, 5 check helpers, 13 Checkmk fetchers and 4 Checkmk checkers are started. These values are set under Global settings > Monitoring Core and can be customized by you:

To find out if and how you need to change the default values, you have several options:

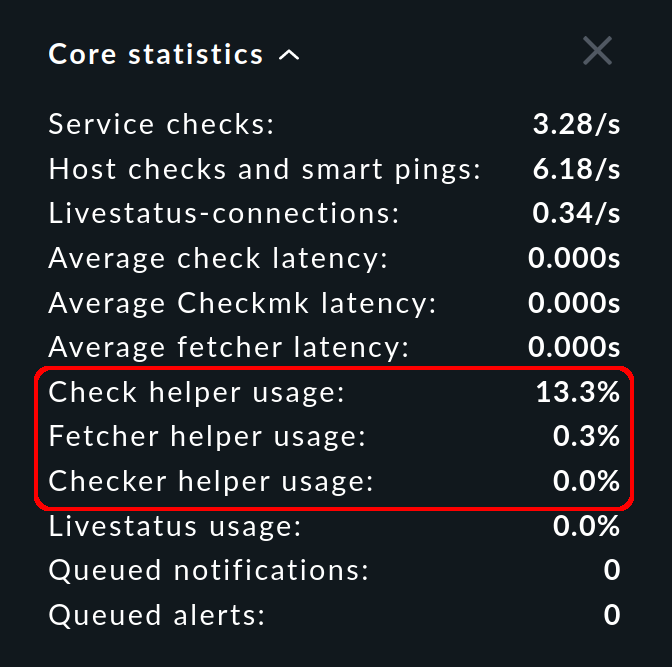

In the sidebar, the Core statistics snapin shows you the percentage utilisation averaged over the last 10-20 seconds:

For all auxiliary process types, there should always be enough processes to run the configured checks. If a pool is being 100% utilised, the checks will not be executed in time, the latency will grow and the states of the services will not be up-to-date.

The usage should not exceed 80% a few minutes after starting a site. For higher percentages, you should increase the number of processes. Since the necessary number of Checkmk fetchers grows with the number of monitored hosts and services, a correction is most likely here. However, be careful to create only as many auxiliary processes as are really needed, as each process occupies resources. In addition, all auxiliary processes are initialised in parallel when the CMC is started, which can lead to load peaks.

The Core statistics snapin shows you not only the usage, but also the latency. For these values, the simple rule applies: the lower, the better — and 0 seconds is therefore best.

Note: You can also display the values shown in the snapin for your site in the details of the OMD <site_name> performance service.

As an alternative to the Core statistics snapin, you can also have your configuration analyzed by Checkmk, with Setup > Maintenance > Analyze configuration. The advantage: Here you get an immediate evaluation from Checkmk how the state of the auxiliary processes is. Very handy is: If one of the auxiliary processes is not OK, you can open from the help text the corresponding Global settings option to change the value.

4. Initial scheduling

During scheduling it is defined which checks should be run at what times. Nagios has implemented numerous procedures that should ensure that the checks are regularly distributed over the interval. It will likewise attempt to distribute the queries to be run on an individual target system uniformly over the interval.

The CMC has its own, simpler procedure for this purpose. This takes into account that Checkmk already contacts a host once per interval. Furthermore, the CMC ensures that new checks are immediately executed and not distributed over several minutes. This is very convenient for the user since a new host will be queried as soon as the configuration has been activated. In order to avoid a large number of new checks causing a load spike, new checks whose number exceeds a definable limit can be distributed over the entire interval. The option for this can be found under Global settings > Monitoring Core > Initial Scheduling.

5. Processing of performance data

An important function of Checkmk is the processing of measurement data, such as CPU utilisation, and its retention for a long time period.

In the Checkmk Raw Edition, PNP4Nagios from Jörg Linge is used for this,

which in turn is based on the RRDtool.

The software performs two functions:

The creation and updating of the Round Robin Databases (RRDs).

The graphic representation of the data in the GUI.

In a Nagios core operation the function mentioned in point 1. above is quite a long process.

Depending on the method, spool files, Perl scripts and an auxiliary process (npcd) written in C is used.

Finally, slightly converted data is written to the RRD cache daemon’s Unixsocket.

The CMC shortens this chain by writing directly to the RRD cache daemon — all intermediate steps are dispensed with. Parsing, and converting the data to the RRDtool’s format is performed directly in C++. This method is possible and sensible nowadays as the RRD cache daemon has already implemented its own very efficient spooling, and with the aid of journal files this means that no data is lost in the case of a system crash.

The advantages:

Reduced Disk-I/O and CPU load

Simpler implementation with markedly more stability

The installation of new RRDs is performed by the CMC with a further helper, activated by cmk --create-rrd.

This creates files optionally compatible with PNP, or with the new Checkmk format (only for new installations).

A switch from Nagios to CMC has no effect on existing RRD files — these will be seamlessly carried-over and will continue to be maintained.

In the Checkmk Enterprise Editions the graphic display of the data in the GUI is handled directly by Checkmk’s GUI itself, so that no PNP4Nagios component is involved.